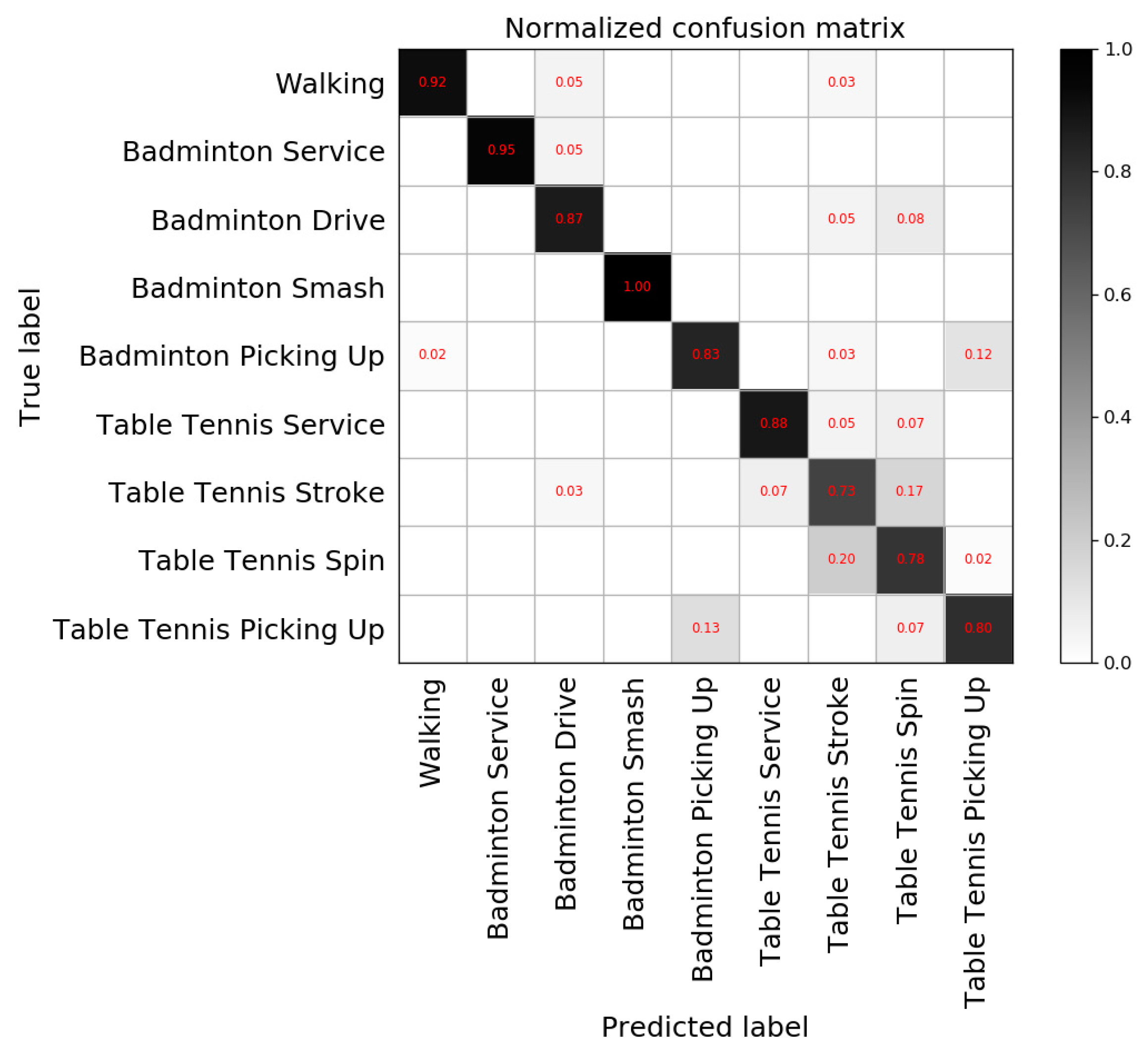

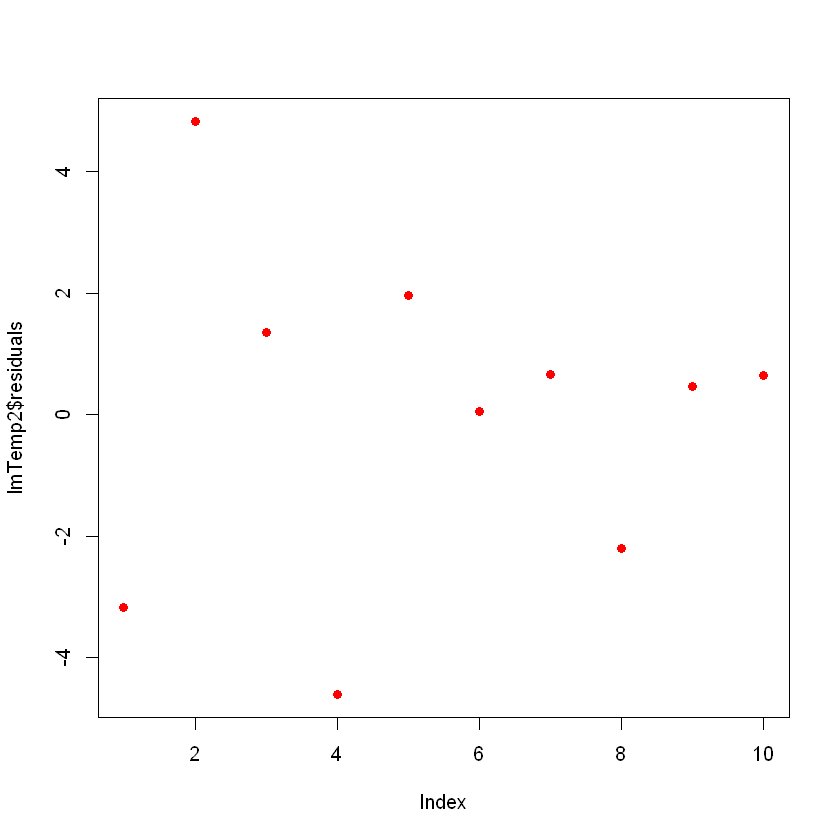

The aim of the package is to make it easier to convert the list outputs from caret and collapse them into row-by-row entries, specifically designed for storing the results in a database or a row-by-row data frame. caret does not do this by default. I designed the package so that confusion matrix outputs can be stored, because we often want to track ML outputs and model fits over time to monitor feature drift and changes in the underlying patterns of the data. The vignette is available for easy reference and walks through the process step by step.

By definition, the sensitivity (also known as the true positive rate) is:

$$\text{TPR} = \frac{TP}{P} = \frac{TP}{TP + FN}$$

Calculating by hand from the confusion matrix above gives:

$$\text{TPR} = \frac{492}{492 + 29} = \frac{492}{521} \approx 0.94$$

Checking with caret confirms a sensitivity of 0.94 (per the caret documentation, `data` is the predicted values and `reference` is the actual values).
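The hand calculation can be reproduced in a few lines of base R as a quick sanity check. This is a minimal sketch: `sensitivity_by_hand()` is a hypothetical helper, not a caret function, and it only needs the TP and FN counts because sensitivity uses nothing but the positive-class column of the reference.

```r
# Hypothetical helper (not part of caret): sensitivity = TP / (TP + FN).
# Only the positive-class reference column (TP and FN) is needed.
sensitivity_by_hand <- function(tp, fn) {
  stopifnot(tp >= 0, fn >= 0, tp + fn > 0)
  tp / (tp + fn)
}

tpr <- sensitivity_by_hand(tp = 492, fn = 29)
round(tpr, 2)
#> [1] 0.94
```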

This is the third package I have managed to create. I hope you find it useful; read on to find out how to install it, work with it and get the most out of it. I have many more projects, tutorials and code snippets available on my GitHub site.

The package guidance and installation instructions can be found directly on the GitHub site, but to install the package follow the steps below:

```r
# install.packages("remotes") # if not already installed
library(ggplot2)
```

The package works with the output of caret's `confusionMatrix()`, which calculates a cross-tabulation of observed and predicted classes with associated statistics:

```r
## Default S3 method:
confusionMatrix(
  data,
  reference,
  positive = NULL,
  dnn = c("Prediction", "Reference"),
  prevalence = NULL,
  mode = "sens_spec",
  ...
)
```

caret also provides `as.matrix.confusionMatrix` (confusion matrix as a table), a conversion function for class `confusionMatrix`:

```r
## S3 method for class 'confusionMatrix'
as.matrix(x, what = "xtabs", ...)
```

Arguments:

- `x`: an object of class `confusionMatrix`
- `what`: data to convert to matrix; either `"xtabs"`, `"overall"` or `"classes"`

The yardstick package offers a similar `conf_mat()` function:

```r
library(dplyr)
library(yardstick)
data("hpc_cv")

# The confusion matrix from a single assessment set (i.e. fold)
cm <- hpc_cv %>%
  filter(Resample == "Fold01") %>%
  conf_mat(obs, pred)
cm
#>           Truth
#> Prediction  VF   F   M   L
#>         VF 166  33   8   1
#>         F   11  71  24   7
#>         M    0   3   5   3
#>         L    0   1   4  10

# Now compute the average confusion matrix across all folds in
# terms of the proportion of the data contained in each cell.
```
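The core idea of collapsing a confusion matrix into one row per model run can be sketched in base R. This is an illustration under stated assumptions, not the package's API: `flatten_confusion()` and the toy labels are hypothetical, and the helper assumes a binary problem laid out the way caret lays it out (predictions in rows, reference in columns).

```r
# Hypothetical helper (not part of caret or of the package described here):
# collapse a 2x2 confusion table into a single data-frame row so that the
# results of repeated model runs can be appended to a log table or database.
flatten_confusion <- function(tab, positive, run_id = NA_character_) {
  stopifnot(length(dim(tab)) == 2, nrow(tab) == 2, ncol(tab) == 2,
            positive %in% colnames(tab))
  # Same layout as caret: rows are predictions, columns are the reference
  negative <- setdiff(colnames(tab), positive)
  tp <- tab[positive, positive]
  fn <- tab[negative, positive]
  fp <- tab[positive, negative]
  tn <- tab[negative, negative]
  data.frame(
    run_id      = run_id,
    tp          = as.integer(tp),
    fn          = as.integer(fn),
    fp          = as.integer(fp),
    tn          = as.integer(tn),
    sensitivity = tp / (tp + fn),
    specificity = tn / (tn + fp),
    accuracy    = (tp + tn) / sum(tab),
    logged_at   = Sys.time(),
    stringsAsFactors = FALSE
  )
}

# Toy labels, invented purely to exercise the helper
lvls <- c("yes", "no")
pred <- factor(c("yes", "yes", "no", "yes", "no", "no"), levels = lvls)
obs  <- factor(c("yes", "no",  "no", "yes", "yes", "no"), levels = lvls)
tab  <- table(Prediction = pred, Reference = obs)

# Each run adds one row; over time this becomes a history of model fits
log_df <- flatten_confusion(tab, positive = "yes", run_id = "run-001")
log_df <- rbind(log_df, flatten_confusion(tab, positive = "yes", run_id = "run-002"))
log_df[, c("run_id", "tp", "fn", "fp", "tn", "sensitivity")]
```

With caret, the same helper could presumably be called on the `table` and `positive` elements of a `confusionMatrix` object, e.g. `flatten_confusion(cm$table, positive = cm$positive)`. Multiclass problems would need a different layout, such as one row per class.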
